How to live inside a docker container?

This is a story of how I learned to stop worrying and love the bomb. Well, it’s just about as iconic. Lone developer turns to Docker to set up a clean development environment as seamlessly as possible, including the bells and whistles of SSH agent forwarding. I’m pretty sure some of the folks at Docker HQ might have a small panic attack reading this one. Alas, I’m not a cardiac health professional.

I just realized this is going out on April Fools’ Day, so let me assure you: I’m serious.

So let me give you a short bullet list of what we will try to do:

- We will set up a persistent development environment with docker,

- We will enable using your SSH agent from within docker,

- We will enable using docker from within your docker container,

- We will set up a choice to log in directly to the container.

Everything here is… unorthodox. But very cool.

Persisting your changes

Docker is a bit tricky in the sense that when you run a container, you either `docker start` a stopped container or you `docker run` an image. You can’t `docker run` a container that already exists under the same name, and you can’t `docker exec` into a container with new options like `-v` for volume mounts or `-e` for environment, as you can with `docker run`.

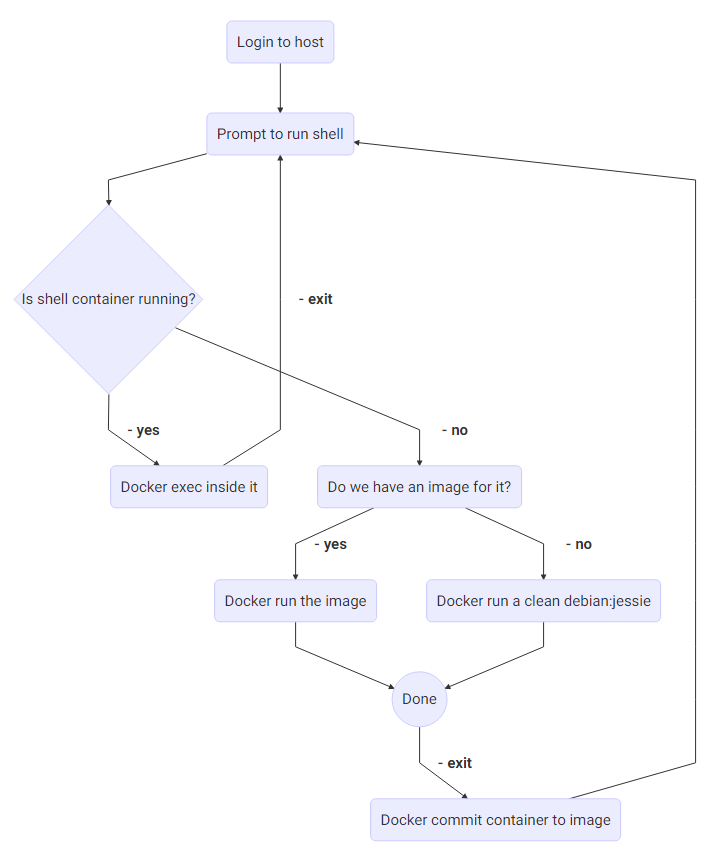

So the logic I wanted is roughly as follows:

To persist the changes made in the docker container, we have to detect the ID of the container, commit this container to an image, and then delete the container. The image will be the building block the next time you start your shell; if you need to restart your environment from scratch, all you have to do is delete the saved image.
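Resetting the environment then comes down to removing that image. A minimal sketch, assuming a helper called `reset_shell` (my name, not part of the original script) that refuses to run while the container is still up. By default `docker rmi` also removes untagged parent images, such as the ones earlier commits leave behind.

```bash
#!/usr/bin/env bash
# Hypothetical helper (not part of the original script): discard the saved
# environment so the next login starts again from the base image.
reset_shell() {
  local name="$1" running
  # --type container: only look at containers, not the saved image
  running=$(docker inspect --type container --format '{{ .State.Running }}' "$name" 2>/dev/null)
  if [[ "$running" == "true" ]]; then
    echo "$name is still running; exit all sessions first" >&2
    return 1
  fi
  # remove the saved image; the next login spawns from scratch
  docker rmi "$name"
}
```

Usage would be something like `reset_shell "shell-$LOGNAME"` from the host shell.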

Detecting if a container runs

First, we need to name our container.

```bash
NAME="shell-$LOGNAME"
```

We will name our container `shell-$LOGNAME`. `$LOGNAME` is the variable that contains the name of the user who logs in via SSH. I’ve seen people set this variable with the `environment="..."` option in SSH’s `authorized_keys` file to differentiate between users based on their SSH key (sshd only honours that option when `PermitUserEnvironment` is enabled in `sshd_config`). I use the same principle, so even if we share a root user for access to a machine, each of us has a unique `$LOGNAME` and environment.

Note: I guess this [root access] is not such a good thing, but then again, adding users into the docker group, or giving them full sudo privileges seems to be the same kind of insecure. We are professionals, and the machines we use this on are dedicated use machines and not some sort of shared semi-public cluster. Using root logins with ssh keys as policy is every bit as secure as using local users with ssh keys and open sudo access.
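One thing to watch out for: `$NAME` is also used as an image name when committing, and image names must be lowercase, while container names only allow letters, digits, `_`, `.` and `-`. A rough sketch of normalising the login name with a hypothetical `shell_name` helper (bash 4+); it doesn’t cover every edge case, for example a name that ends in a separator.

```bash
#!/usr/bin/env bash
# Hypothetical helper: turn a login name into a container/image name.
# Image names must be lowercase, and container names only allow
# letters, digits, '_', '.' and '-', so anything else becomes '-'.
shell_name() {
  local login="${1:-${LOGNAME:-$(id -un)}}"
  login="${login,,}"                  # lowercase (bash 4+)
  login="${login//[^a-z0-9_.-]/-}"    # replace disallowed characters
  printf 'shell-%s\n' "$login"
}
```

It could replace the plain assignment with `NAME=$(shell_name)`.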

When we want to get a shell, we should check if there’s a running container waiting for us.

```bash
function give_shell {
    NAME=$1
    RUNNING=$(docker inspect --format="{{ .State.Running }}" "$NAME" 2> /dev/null)
    if [[ "$RUNNING" == "true" ]]; then
        # attach a shell with docker exec
        docker_attach_shell "$NAME"
    else
        # ...
```

This is how we start out with getting a docker shell. We use the `docker inspect` command to get the state of the container `$NAME`. If the container is found and running (the response equals “true”), we attach a shell with the `docker_attach_shell` function.

```bash
function docker_attach_shell {
    CONTAINER=$1
    echo "Attaching a bash session to $CONTAINER..."
    docker exec -it "$CONTAINER" /bin/bash
}
```

What we accomplish here is that we can use the same container with every login. This also means that when you start a container and pass the SSH agent information to it, every other session you attach can use the same SSH agent. It opens a few doors.
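There’s a third state the running check doesn’t distinguish: a container named `$NAME` that exists but is stopped, for example if the host rebooted before the cleanup ran. `docker inspect` then prints `false`, and the later `docker run --name $NAME` fails because the name is already taken. A sketch of a hypothetical `container_state` helper that tells the three cases apart; `--type container` keeps `docker inspect` from falling back to the saved image of the same name.

```bash
#!/usr/bin/env bash
# Hypothetical helper: print "running", "stopped" or "absent" for a container.
container_state() {
  local running
  # --type container: don't fall back to an image with the same name
  if ! running=$(docker inspect --type container \
      --format '{{ .State.Running }}' "$1" 2>/dev/null); then
    echo absent
  elif [[ "$running" == "true" ]]; then
    echo running
  else
    echo stopped
  fi
}
```

A stopped container could then be committed and removed (or simply `docker start`-ed) before spawning a new one.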

Checking if we have an image to use

Checking to see if we have a `shell-$LOGNAME` image on the system lets us use the image where we left off last time. As you can see in the code, starting up a container works exactly the same whether or not the image exists: if the image doesn’t exist, it uses `debian:jessie` to start it.

```bash
FRESH_START="debian:jessie"

if [[ "$(docker images -q "$NAME" 2> /dev/null)" == "" ]]; then
    # start a clean container
    echo "Spawning $NAME from scratch"
    docker_run_shell "$NAME" "$FRESH_START"
else
    # start from an existing image
    echo "Starting $NAME from saved copy"
    docker_run_shell "$NAME" "$NAME"
fi
```
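The same decision can be wrapped in a small function that just prints which image to start from, which makes it easy to test without starting anything. A sketch with a hypothetical `pick_image` helper; the base image is a parameter here, defaulting to `debian:jessie` as in the post.

```bash
#!/usr/bin/env bash
# Hypothetical helper: print the image a new shell container should start from.
pick_image() {
  local name="$1" fresh="${2:-debian:jessie}"
  # docker images -q prints an image ID only if "$name" exists locally
  if [[ -n "$(docker images -q "$name" 2>/dev/null)" ]]; then
    echo "$name"
  else
    echo "$fresh"
  fi
}
```

With it, both branches collapse into `docker_run_shell "$NAME" "$(pick_image "$NAME")"`.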

Using docker from within a docker container

This might not be the smartest idea if you’re concerned about security. You’re basically giving anyone with a docker shell full access to your docker host. This means they can run anything and, more importantly, also delete anything, or fill your disk with useless images and/or containers. But whatevs man, running the host’s docker from inside a container is cool!

Note: I will construct an `$ARGS` variable which will hold these arguments for `docker run`.

```bash
# docker needs a few libraries, but not all - essential libs here
BIND_LIBS=$(ldd /usr/bin/docker | grep /lib/ | awk '{print $3}' | grep -E '(apparmor|libseccomp|libdevmap|libsystemd-journal|libcgmanager.so.0|libnih.so.1|libnih-dbus.so.1|libdbus-1.so.3|libgcrypt.so.11)')

ARGS=""
for LIB in $BIND_LIBS; do
    ARGS="$ARGS -v $LIB:$LIB:ro"
done
```

It’s a bit complicated, and might be a bit over the top for a beginner. To break it down simply: we will be forwarding the docker binary to the container. The docker binary uses a number of shared libraries which must match in order for it to work inside the container. The `ldd` command lists the libraries the docker binary uses, and the rest of the long line reduces them to only those which I isolated as essential (must-match).

tl;dr: this forwards all needed libraries to the container using `-v` volumes.
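The string-based `$ARGS` works as long as no path contains spaces. A sketch of the same library filter collecting the mounts into a bash array instead; `lib_mounts` and `LIB_ARGS` are hypothetical names. The `awk` filter keeps only lines whose third field is an absolute path, which skips entries like `linux-vdso.so.1` that have no file on disk. If your docker binary is statically linked, `ldd` reports “not a dynamic executable”, nothing matches, and there is nothing to forward.

```bash
#!/usr/bin/env bash
# Hypothetical helper: build read-only bind mounts for the shared libraries
# a binary links against, keeping only paths matching an extended regex.
# The result goes into the global array LIB_ARGS.
lib_mounts() {
  local binary="$1" pattern="$2" lib
  LIB_ARGS=()
  while read -r lib; do
    LIB_ARGS+=(-v "$lib:$lib:ro")
  done < <(ldd "$binary" | awk '$3 ~ /^\// {print $3}' | grep -E "$pattern")
}
```

Usage: `lib_mounts /usr/bin/docker 'apparmor|libseccomp|libdevmap'`, then pass `"${LIB_ARGS[@]}"` to `docker run`.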

The last step in using docker from the container is to forward the docker binary, and the docker socket - this is the resource over which the docker binary communicates with the docker host.

```bash
ARGS="$ARGS -v /var/run/docker.sock:/run/docker.sock -v $(which docker):/bin/docker:ro"
```

Now you can run all your `docker ps -a` commands from within the container, and they should work. If you get an error, the library list may need adjusting.
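If the socket isn’t where we expect it, the container still starts and the failure only shows up later as a confusing error inside it. A sketch of a hypothetical `docker_cli_args` helper that checks for the socket up front and builds the same two mounts as an array (`DOCKER_CLI_ARGS` is also a made-up name).

```bash
#!/usr/bin/env bash
# Hypothetical helper: build the socket and binary mounts as an array,
# failing early if the docker socket isn't there.
docker_cli_args() {
  local sock="${1:-/var/run/docker.sock}" bin="${2:-$(command -v docker)}"
  if [[ ! -S "$sock" ]]; then
    echo "no docker socket at $sock" >&2
    return 1
  fi
  if [[ -z "$bin" ]]; then
    echo "docker binary not found" >&2
    return 1
  fi
  # same mount targets as the original ARGS line
  DOCKER_CLI_ARGS=(-v "$sock:/run/docker.sock" -v "$bin:/bin/docker:ro")
}
```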

Note: I’ve found that `docker ps` works like this, but I haven’t tested anything beyond that. It doesn’t seem to need any kind of `--privileged` flag, and by my logic the only problems could come from libraries which might be required on very different container base images. I’ve at least added `:ro` options to the library and binary mounts, so there’s less of a chance of messing up the host by upgrading any of that software inside the docker container.

Forwarding SSH agent to the container

When we use `docker run` to start a container from an image, we can pass environment variables with `-e` and volumes with `-v`. The SSH agent works via a socket file, and the location of this file is stored in the `SSH_AUTH_SOCK` environment variable. We can forward both of these to the docker container, so we can use ssh inside it with our existing ssh-agent.

```bash
# pass ssh agent
if [ -n "$SSH_AUTH_SOCK" ]; then
    ARGS="$ARGS -v $(dirname "$SSH_AUTH_SOCK"):$(dirname "$SSH_AUTH_SOCK") -e SSH_AUTH_SOCK=$SSH_AUTH_SOCK"
fi
```

This is all you need to use your ssh agent from within a docker container.
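A slightly more defensive variant: a sketch (hypothetical `ssh_agent_args` helper and `SSH_ARGS` array) that only adds the mount when `$SSH_AUTH_SOCK` actually points at a socket. With a stale variable from an expired session, `docker run -v` would otherwise create the missing host directory and mount an empty folder.

```bash
#!/usr/bin/env bash
# Hypothetical helper: collect the docker run options that forward the
# caller's SSH agent into the container, in the array SSH_ARGS.
ssh_agent_args() {
  SSH_ARGS=()
  local sock="${SSH_AUTH_SOCK:-}" dir
  # -S: only forward if the variable points at an existing socket
  if [[ -n "$sock" && -S "$sock" ]]; then
    dir=$(dirname "$sock")
    # mount the whole directory at the same path and keep the variable as-is
    SSH_ARGS=(-v "$dir:$dir" -e "SSH_AUTH_SOCK=$sock")
  fi
}
```

Usage: call `ssh_agent_args`, then pass `"${SSH_ARGS[@]}"` to `docker run`.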

Actually running the docker container

Well, this is the “meat” of everything:

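```bash
# body of docker_run_shell: $NAME is its first argument,
# $DOCKERFILE the image passed as its second argument
docker run -it --net=party -w /root \
    -h "$NAME" --name "$NAME" \
    $ARGS \
    -e LANG="$LANG" -e LANGUAGE="$LANGUAGE" \
    "$DOCKERFILE" /bin/bash

echo "Committing changes to $NAME, please wait..."
# commit by exact name: a --filter="name=..." lookup matches substrings,
# so shell-bob would also pick up shell-bobby
docker commit "$NAME" "$NAME"

echo "Deleting container after us."
docker rm -f "$NAME"

echo "Done."
```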

To explain it in the shape of a bullet list:

- we attach it to a custom network (`--net=party`), which has to exist beforehand (create it once with `docker network create party`),

- we set the working directory to `/root`,

- we set a name and a hostname,

- we pass all the `$ARGS`,

- we also pass the `LANG` and `LANGUAGE` environment variables so the terminal will behave nicely.

When the container exits, we commit the changes into an image and delete the container after us. This image will be used the next time the container starts.
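One side effect of committing on every exit: each `docker commit` stacks another layer on top of the previous image, and storage drivers limit how many layers an image can have. A sketch of a hypothetical `check_layers` helper that uses the number of `docker history -q` entries as a rough proxy for the layer count and warns past a threshold (100 here is an arbitrary choice). One way to flatten the image is to `docker export` a container and `docker import` the tarball, at the cost of losing image metadata such as the default command.

```bash
#!/usr/bin/env bash
# Hypothetical check: warn when the saved image has many history entries.
# Every docker commit adds one more layer on top of the previous image.
check_layers() {
  local name="$1" limit="${2:-100}" count
  count=$(docker history -q "$name" 2>/dev/null | wc -l)
  if (( count > limit )); then
    echo "warning: $name has $count history entries; consider flattening it" >&2
    return 1
  fi
}
```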

Finishing touches

Well, I wouldn’t be me if I didn’t want to have options. I want to use the docker shell, but on the other hand, sometimes you need to use the host machine too. Since we’re working in bash, I see no issue here, so I wrote a quick menu that lets me choose which option I want.

```bash
NAME="shell-$LOGNAME"

function show_menus {
    echo "---------------------"
    echo
    echo "1. Enter docker shell ($NAME)"
    echo "2. Drop to system shell (host: $(uname -n))"
    echo
}

function read_options {
    local choice
    read -r -p "Enter choice: " choice
    case $choice in
        1) give_shell "$NAME" ;;
        *) exit ;;
    esac
}
```
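The two functions still need to be called from somewhere. A sketch of a hypothetical entry point (`menu_main`, with the choice logic split out into an equally hypothetical `menu_action` so it can be tested on its own) that only shows the menu when attached to a terminal, as a safeguard in case `scp`, `rsync` or `ssh host command` sessions end up reading the file. It uses `return` instead of `exit`, which matters if the file is sourced from `.bash_aliases`: `exit` in a sourced file ends the login shell itself. It assumes `show_menus`, `give_shell` and `$NAME` from the script.

```bash
#!/usr/bin/env bash
# Hypothetical: map a menu answer to an action, kept separate so it can be tested.
menu_action() {
  case "$1" in
    1) echo docker ;;
    2) echo host ;;
    *) echo unknown ;;
  esac
}

# Hypothetical entry point; relies on show_menus, give_shell and $NAME
# from the script.
menu_main() {
  # only prompt on an interactive terminal
  [[ -t 0 && -t 1 ]] || return 0
  local choice
  show_menus
  read -r -p "Enter choice: " choice
  case "$(menu_action "$choice")" in
    docker) give_shell "$NAME" ;;
    *) return 0 ;;  # option 2 or anything else: stay on the host shell
  esac
}
```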

It’s not the best-looking, most colorful or feature-packed … feature. But it does its job. I saved the whole thing as one script and added the script to `/root/.bash_aliases`. This way, every time I log into the system, I’m prompted with a choice between the docker environment, which I can mess with, and the host system, which I try to keep clean.

What else?

It’s possible to improve this container by including an additional mount from the host for some persistent storage location: whatever you want to keep “forever”, you can drop into `/mnt` or some other location. All you need is an additional `-v` option to do this.
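A sketch of that extra mount, assuming a per-user directory under a host path of your choosing; the hypothetical `add_persistent_mount` helper takes the root directory and the container name. It appends to the same string-based `$ARGS` as the rest of the script, so the path shouldn’t contain spaces.

```bash
#!/usr/bin/env bash
# Hypothetical helper: create a per-container directory on the host and
# append a bind mount of it at /mnt to the string-based $ARGS.
add_persistent_mount() {
  local dir="$1/$2"
  mkdir -p "$dir" || return 1
  ARGS="${ARGS:-} -v $dir:/mnt"
}
```

For example, `add_persistent_mount /srv/persist "$NAME"` (the `/srv/persist` root is only an example) before the `docker run` line. Since the directory lives on the host, deleting the saved image doesn’t touch it.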

The complete script is available as a gist, so you can use it, test it, share it, improve it. Any feedback is good feedback. A shameless plug: I’d be happy if somebody registered on Digital Ocean to get $10 off and help pay my VPS bills. And there’s my Twitter if you want to give me a follow.

While I have you here...

It would be great if you buy one of my books:

- Go with Databases

- Advent of Go Microservices

- API Foundations in Go

- 12 Factor Apps with Docker and Go

For business inquiries, send me an email. I'm available for consultancy/freelance work. See my page for more details.
