Setting up a remote digital workspace

In a post on TopTal, Ivan Voras describes his remote digital workplace.

From it, I can only understand that he is a freelancer who didn’t grow out of the start-up garage mentality. Amazon, Google and Apple started in a garage, but it was a different time. And what people seem to forget - they were working, they weren’t setting up a work environment. Today you can lease a server, set it up in minutes and start working.

Amazon, Apple and Google don’t force their employees to use their own personal servers from home. If you’re a professional, you should separate your home and your profession. While it’s perfectly acceptable to have servers at home - the mindset that they are your work servers needs to go away. Everything the author lists is a single point of failure.

Let’s list them very quickly:

- Electricity

- Internet uplink

- Devices (bridge, router, server)

- Storage

I might be slightly upset by the author’s attitude towards explaining his setup as a viable option for a professional, as I tend to gravitate towards redundancy and availability as a core mantra with our clients. While applications are rarely designed to be redundant (or scalable) in the beginning, the application itself is the last single point of failure you are left with, and also the hardest to fix. The only thing you can do at that point is to buy better, more performant hardware. If you keep growing, it buys the most valuable thing - time.

But back to my points:

Electricity in your home is not redundant. Power outages happen. If you have sensitive data or services in your home, a UPS might give you that hour or two to at least shut down your server, preventing possible data loss. But it will not solve the basic requirement of remote work - connectivity.

Internet uplinks between you and your ISP are basically one long cable. The worst case scenario is that this cable gets accidentally shredded during some road works and takes days to repair. But internet outages are nothing unusual. I can’t say that any ISP will give you a 99.99% SLA on a home connection, not even 99%, or any SLA at all. While it might be reliable most of the time, affordable and unmetered - it’s classified as best effort. It is possible in some countries to get a secondary uplink at a low or next to zero cost (a second SIM on your phone carrier plan, plus the cost of an access point that takes a SIM card). System administration of such a redundancy is tricky at best - and this isn’t billable time.
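
To put those SLA percentages in perspective, here is a quick back-of-the-envelope sketch in Python. It only converts an availability percentage into the downtime it permits per year. The function name is made up for illustration, and a real SLA may measure availability per month and exclude scheduled maintenance.

```python
HOURS_PER_YEAR = 365 * 24  # 8760 hours, ignoring leap years


def allowed_downtime_hours(availability_percent: float) -> float:
    """Hours per year a service may be down while still meeting the target."""
    return HOURS_PER_YEAR * (1 - availability_percent / 100)


for target in (99.0, 99.9, 99.99):
    hours = allowed_downtime_hours(target)
    print(f"{target}% availability allows {hours:.2f} hours of downtime per year")
```

By this arithmetic a 99% target still permits about 87.6 hours of outage a year, while 99.99% leaves less than one hour. A best effort home line promises neither.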

The hardware - this is making me cringe. There are two infrastructural points on the network level, the bridge and the router. Each one of them can die at any point. If one dies - your “home” service goes with it. In the best case it can be replaced on the same day: if it dies in the morning, if the ISP has a replacement on hand, or if you have a replacement yourself or can readily buy one in a store close to you (access points for example). A bunch of ifs.

And then there’s the final piece of hardware, problematic on all counts: the Raspberry Pi.

- SD cards, even higher class SDHC cards, are slow

- Raspberry Pi design (ports, power, architecture)

- No storage redundancy (RAID)

While having a Raspberry Pi is fine for something like an environmental sensor logging data like temperature, or playing with the on-board GPIO pins in your tinkering projects - its purpose is to be an educational tool. As such it is very limited in the number of devices that can be attached, and in the power those devices can draw before causing system instability. You might be able to attach one SSD to a USB port, or it might cause your RPi to reboot when you try to attach a WiFi dongle (as does mine). Good luck attaching two disks and trying to set up a RAID mirror!

The SD cards top out at just over 20MB/s, while the lowest tier server I have on Digital Ocean performs disk reads at 10 times that speed. While I wouldn’t suggest that you could use a cloud server for your NAS at home, I also wouldn’t suggest that you use RPi for anything work related. Using it for very specific applications is fine, but as soon as you use it for a general work machine, it will choke.
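
A rough sketch of what that difference means in practice, using the two figures above (about 20MB/s for the SD card and ten times that for the VPS disk). The 1GB workload is a hypothetical example, and real throughput varies with the card, the access pattern and caching.

```python
SD_CARD_MB_PER_S = 20    # "just over 20MB/s" from the text, rounded down
VPS_DISK_MB_PER_S = 200  # "10 times that speed"


def transfer_seconds(size_mb: float, throughput_mb_per_s: float) -> float:
    """Seconds needed to read size_mb at a sustained sequential throughput."""
    return size_mb / throughput_mb_per_s


workload_mb = 1024  # hypothetical: reading 1GB of project files or a database dump

print(f"SD card:  {transfer_seconds(workload_mb, SD_CARD_MB_PER_S):.1f} s")
print(f"VPS disk: {transfer_seconds(workload_mb, VPS_DISK_MB_PER_S):.1f} s")
```

Reading 1GB takes about 51 seconds at SD card speed and about 5 seconds at the VPS disk speed, and that gap repeats on every build, import or backup.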

And my final point - the platform of the RPi is ARM and not x86. While in practice a user can be ignorant of this, it means that some software is not compatible between the two, or will behave strangely on one or the other. A good rule of thumb is to run your development and production systems on the same architecture. While it might be totally fine for server side scripting languages (like PHP, Python, Node), I have experienced issues with database servers and other common libraries.

In any case - if you use RPi for work, your work should be targeting RPi.

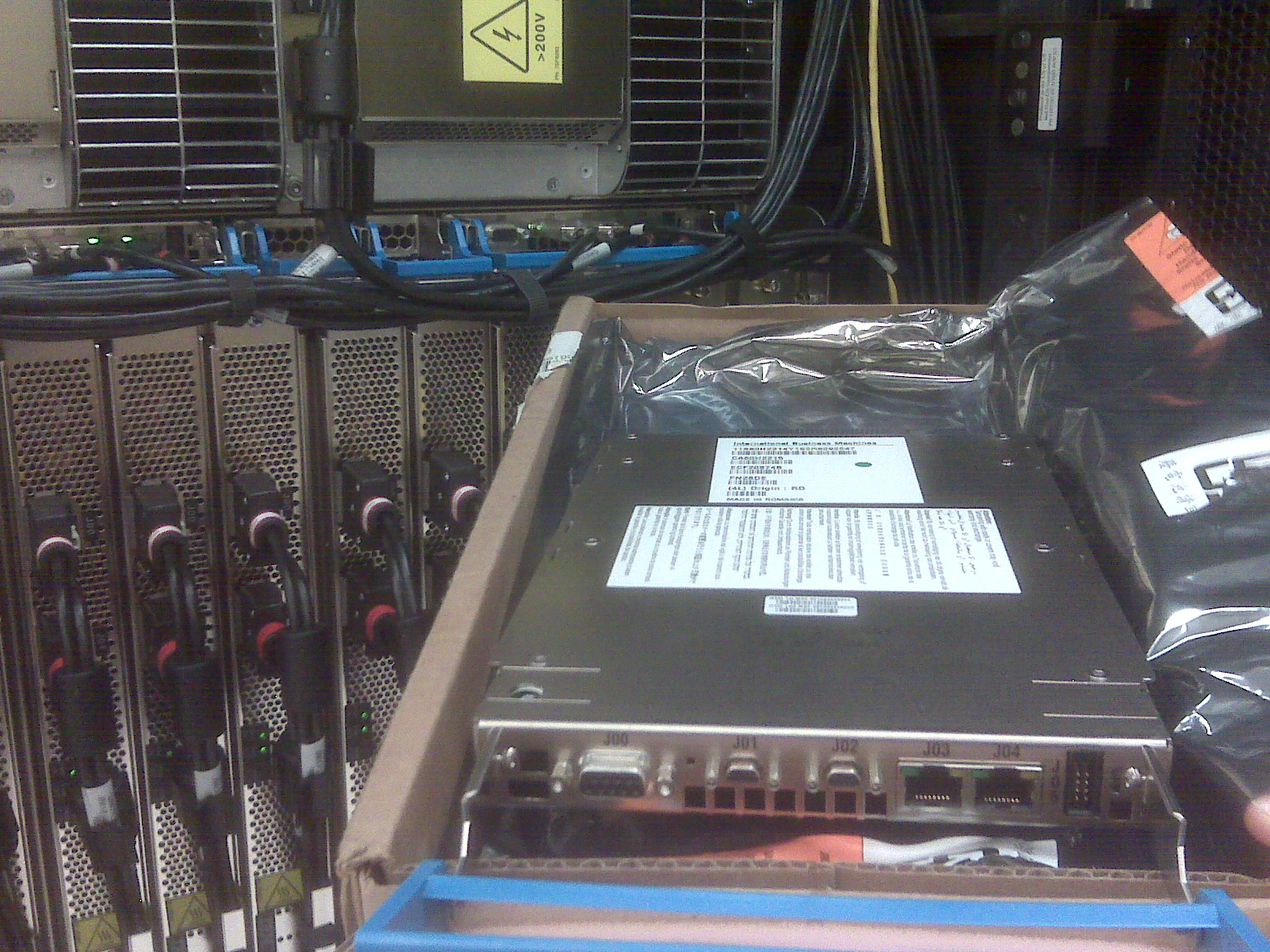

In a cruel twist of events, the author mentions that he leases the cheapest bare metal server for redundancy. In his case, that means 500GB of SSHD on a VIA 1-core server with 2GB of RAM, which would blow the RPi out of the water. Keep in mind that this server is most likely in a datacenter with redundant uplinks and redundant power supplies - basically a high level of fault tolerance which the RPi doesn’t have at all. It can be accessed from anywhere, and there’s most likely a team of on-call engineers that will be dispatched to solve networking or hardware issues.

It’s still not the ideal choice for backups - Amazon S3 provides reduced redundancy storage at 99.99% reliability for about $12 a month. But if you want it only for backups, you can have Amazon Glacier for $5 a month - if you need to retrieve a backup, just be prepared to wait a few hours. And pricing is pay as you go - unless you fill up 500GB right away, your costs will be much lower. You can use s3fs or s3ql to mount the S3 bucket and use it much like a normal filesystem.
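
To illustrate the pay as you go point, here is a small sketch. It assumes the $12 and $5 monthly figures above are the price of a full 500GB, derives a per-GB rate from them, and ignores request and transfer fees. Treat the rates as illustrative, not as a current price list.

```python
FULL_SIZE_GB = 500  # size of the leased disk the cloud storage is compared against

# Per-GB monthly rates derived from the figures in the text.
# Assumption: those figures describe a completely full 500GB.
S3_REDUCED_REDUNDANCY_RATE = 12 / FULL_SIZE_GB
GLACIER_RATE = 5 / FULL_SIZE_GB


def monthly_cost(stored_gb: float, rate_per_gb: float) -> float:
    """Monthly storage cost in dollars when you only pay for what you store."""
    return stored_gb * rate_per_gb


for stored_gb in (50, 200, 500):
    s3 = monthly_cost(stored_gb, S3_REDUCED_REDUNDANCY_RATE)
    glacier = monthly_cost(stored_gb, GLACIER_RATE)
    print(f"{stored_gb:>3} GB stored: S3 ${s3:.2f}/month, Glacier ${glacier:.2f}/month")
```

Under these assumptions, 50GB of backups would cost about $1.20 a month on S3 and about $0.50 on Glacier.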

There’s one more critical thing here: “bare metal”. While I didn’t find any information on how the storage is attached, I did find a 4hr response time for hardware failures. Seeing how the higher support tiers allow you access to the facility to plug USB keys and similar into your leased hardware, I suspect that this disk is internal and doesn’t have any kind of RAID pair for redundancy. In comparison, with Digital Ocean you get a lot less space (20GB for the same price tier), but you get weekly backups, and the whole thing is on a SAN, meaning there are SAN redundancies (fault tolerant multi-path I/O) and RAID redundancies on the actual storage array, ensuring data safety (RAID6? Hot spares,…).

So, if you allow a suggestion that might fit your work well:

Get a VPS which allows you to upgrade. Digital Ocean is a good option which is working out for me. Their starting tier is about $6/month with weekly backups, and you can UPGRADE your server if your needs get bigger. No reinstallation, just a short reboot, and you can have 4 cores and 8GB RAM. I am pretty sure that your development environment will not “grow” so fast. There’s also an option of a temporary upgrade, which keeps your disk size and only increases CPU and RAM (I haven’t researched this one fully). The point is that a disk can’t be shrunk after it was expanded, but CPU/RAM is flexible. If Digital Ocean is not a fit for you, Google, Amazon and Microsoft all offer competing cloud computing services where you can choose a server that best fits your needs. There are many lower cost providers as well, but your mileage may vary.

If you consider that your remote work will sometimes be in actual remote areas with spotty or no internet, I would suggest you buy a USB disk instead. I can recommend the WD Passport line, which I use in the office. At the time of writing you can get a WD Passport Ultimate disk for $60 for 1TB and $90 for 2TB. At such a price point, it’s worth considering, as the cost of a disk would be covered within a year, and you can add disks as you go. The only question is if you have the discipline for taking care of offline backups. Most people don’t.
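
A quick way to sanity check the “covered within a year” claim is to divide the disk price by whatever monthly fee it replaces. The sketch below does that for the 1TB disk against the monthly figures mentioned earlier in this post. Which of those fees the disk actually replaces for you is an assumption you need to fill in yourself.

```python
import math


def months_to_break_even(disk_price: float, monthly_fee: float) -> int:
    """Whole months of a recurring fee needed to match or exceed a one-off disk price."""
    return math.ceil(disk_price / monthly_fee)


DISK_PRICE_1TB = 60  # dollars, from the text

# Monthly figures quoted earlier in the post; the labels are only for printing.
monthly_fees = {
    "Glacier": 5,
    "VPS starting tier": 6,
    "S3 reduced redundancy": 12,
}

for label, fee in monthly_fees.items():
    months = months_to_break_even(DISK_PRICE_1TB, fee)
    print(f"{label}: disk pays for itself after {months} months")
```

With these numbers the 1TB disk breaks even after 12, 10 and 5 months respectively.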

If you’re a programmer, use Bitbucket for source control. You’re probably already using git, but opt for a free cloud service like Bitbucket instead of a self-hosted GitLab deployment. Or perhaps in addition to it. If your server disappears, your code will still be on Bitbucket, as long as you remember to push. The service is reliable, and it’s made by Atlassian, the guys behind JIRA. It’s not going away any time soon.

And use Dropbox! I can’t say how much my work process has depended on Dropbox in the last year. It’s not only for storing my files; clients also share files with me. An active client prepares materials like graphics and content and saves them on Dropbox, and since the folder is shared it is also available on the server - this opens up the possibility of CI (continuous integration) jobs, which import the content into databases or mirror it to your staging environment for the client, or any other “from here to there” action. Automation is a good thing, and Dropbox is as friendly as it gets.
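
As a sketch of such a “from here to there” job, the Python below mirrors new or changed files from a synced folder into a staging directory. The function names and any paths you pass in are hypothetical, it uses only the standard library, and it never deletes anything on the target side. A real CI job would likely add logging and error handling.

```python
import hashlib
import shutil
from pathlib import Path


def file_digest(path: Path) -> str:
    """SHA-256 of a file's contents, used to detect changes."""
    return hashlib.sha256(path.read_bytes()).hexdigest()


def mirror_changed_files(source: Path, target: Path) -> list[Path]:
    """Copy new or changed files from source to target.

    Returns the relative paths that were copied, so a caller can decide
    whether a follow-up step (for example a database import) is needed.
    """
    copied = []
    for src in sorted(source.rglob("*")):
        if not src.is_file():
            continue
        relative = src.relative_to(source)
        dst = target / relative
        # Skip files whose content is already identical on the target side.
        if dst.exists() and file_digest(dst) == file_digest(src):
            continue
        dst.parent.mkdir(parents=True, exist_ok=True)
        shutil.copy2(src, dst)
        copied.append(relative)
    return copied
```

A scheduled job could call `mirror_changed_files(Path("/srv/Dropbox/client-assets"), Path("/srv/staging/assets"))` (both paths are placeholders) and only trigger the import when the returned list is not empty.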

And if the cloud is not your thing, I hope you have a powerful laptop. With tools like Vagrant you can provision your development environment on your laptop. You can use Dropbox or Bitbucket to store your SQL schemas and non-source files needed for your development and import them as you’re provisioning your development environment. This environment can then travel with you completely offline, enabling you to work in the most remote places. Configuring backups might not be the easiest, but with a git workflow you can keep the important things stored at Bitbucket, which means if your laptop gets stolen, confiscated or accidentally run over with a bulldozer - get a new laptop and start working within minutes.

If your remote digital work space requires hands-on intervention in case of issues, it’s not remote, it’s adjacent, and somebody will have to be on site when issues inevitably occur. If you’re trying to live the nomadic lifestyle and travel around Thailand, which is a very popular destination for digital nomads these days, I’d suggest you stick to the cloud, or take your RPi with you - but be prepared, these things are basically toys, they are made to suck your time instead of just working. A VPS just works.

- update: Apparently you can go up to 64GB RAM and 20 cores with Digital Ocean. Sweet.
